This is a real-life SEO case study that shows how I grew a website’s organic traffic from about 500 to 5,000+ daily visitors in under 24 hours.

In this post, you’ll see exactly how I:

- Fixed crawl and indexing issues with robots.txt

- Removed deadweight pages dragging the site down

- Optimized Meta Titles, Descriptions, and H1s to skyrocket CTR

- Created a simple daily SEO routine that drove rapid results

- And plenty more

If your site is getting traffic but not breaking through, this strategy might be what you’re missing. Let’s dive in!

Client Background & Initial Assessment

The site had:

- 400–500 daily visitors

- Slight weekend spike (up to 600)

- Every target keyword ranking on page 1 of the SERPs, but with a low CTR

After getting access to Google Search Console, I spotted multiple crawl issues and index coverage wasted on low-value URLs. Based on the client’s monetization potential, I quoted $700 for the project (with $500 upfront).

Core Technical SEO Fixes: Robots.txt Optimization

User-agent: *

Disallow: /wp-admin/

Disallow: /feed/

Disallow: /tag/

Disallow: /author/

Disallow: /*/page/

Disallow: /search

Disallow: /404

Disallow: /410

Sitemap: https://clientsite.com/sitemap_index.xml

- The Sitemap line points crawlers to the URLs I want crawled and indexed. It does not block anything by itself; the Disallow rules do that.

- All unnecessary directories and dynamically generated pages are blocked to prevent crawl waste.

Googlebot has a limited crawl budget for each site. Blocking junk URLs helps it focus on the real money pages, which improves indexing speed and reduces crawl bloat over time.
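To sanity-check which paths rules like these would block, here is a minimal Python sketch. It is a simplified matcher of my own, not Google's parser: it only handles Disallow rules with the `*` wildcard and a trailing `$`, and it ignores Allow rules and rule precedence. The sample paths are made up for illustration.

```python
import re

# Disallow rules copied from the robots.txt above.
DISALLOW_RULES = [
    "/wp-admin/", "/feed/", "/tag/", "/author/",
    "/*/page/", "/search", "/404", "/410",
]


def rule_to_regex(rule):
    """Turn a robots.txt path rule into a regex matched from the path start."""
    pattern = re.escape(rule).replace(r"\*", ".*")  # '*' = any run of characters
    if pattern.endswith(r"\$"):                      # trailing '$' = end of URL
        pattern = pattern[:-2] + "$"
    return re.compile(pattern)


def is_blocked(path, rules=DISALLOW_RULES):
    """True if any Disallow rule matches the beginning of the path."""
    return any(rule_to_regex(rule).match(path) for rule in rules)


# Hypothetical paths, for illustration only.
for path in ["/tag/seo/", "/category/news/page/2/", "/page/2/", "/best-seo-tips/"]:
    print(path, "blocked" if is_blocked(path) else "crawlable")
```

One thing this sketch surfaces: under these matching rules, `/*/page/` catches paginated archives such as `/category/news/page/2/` but not a top-level `/page/2/`, so it is worth testing the exact URL patterns your own site produces.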

Disable Feed URLs

Blocked all RSS and Atom feed URLs via robots.txt and redirection.

Feed URLs often get crawled unnecessarily. These duplicate content URLs don’t offer SEO value, so blocking them saves crawl budget and prevents thin-content issues.

Remove Useless Redirects

- Removed plugin-generated redirects for old URLs.

- Collapsed 301 chains so that each old URL redirects straight to its final destination.

Over time, redirect chains accumulate and slow down the site. Removing old, unused, or broken redirects helps streamline performance and maintain crawl efficiency.
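As a rough illustration of collapsing chains, the sketch below takes a redirect map (old URL to new URL) and rewrites every entry to point at its final destination. The function name and sample paths are hypothetical; on a real site the map would come from an export of the redirect plugin or server config.

```python
def flatten_redirects(redirects):
    """Map every source URL straight to its final destination."""
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow hops until we reach a URL that no longer redirects.
        while target in redirects:
            if target in seen:  # e.g. /a -> /b -> /a
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


# Hypothetical redirect map with one two-hop chain.
chain = {
    "/old-post": "/newer-post",
    "/newer-post": "/final-post",
    "/about-us": "/about",
}
print(flatten_redirects(chain))
```

The loop check matters in practice: a chain that circles back on itself would otherwise never terminate, and it is exactly the kind of broken redirect worth removing.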

Convert 404 to 410

- Converted all 404 pages into 410 “Gone” errors.

- Ensured robots.txt lets bots crawl these URLs so they can be dropped from the index.

A 410 tells Google that the page was removed on purpose and is not coming back, while a 404 only says the page was not found. In practice this can speed up deindexing somewhat compared to a 404. We also kept these URLs crawlable so search engines actually see the new status.
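The client site runs on WordPress, where this is normally handled by a plugin or server rule. Purely to illustrate the logic, here is a small Python WSGI sketch that answers 410 for a list of deliberately removed paths and passes everything else through. All names and paths in it are hypothetical.

```python
REMOVED_PATHS = {"/old-giveaway/", "/expired-offer/"}  # hypothetical examples


def with_gone_responses(app, removed_paths=REMOVED_PATHS):
    """Wrap a WSGI app so deliberately removed paths answer 410 Gone."""
    def wrapper(environ, start_response):
        if environ.get("PATH_INFO", "") in removed_paths:
            body = b"410 Gone"
            headers = [("Content-Type", "text/plain"),
                       ("Content-Length", str(len(body)))]
            start_response("410 Gone", headers)
            return [body]
        return app(environ, start_response)
    return wrapper


def demo_site(environ, start_response):
    """Stand-in for the real site: answers 200 for everything."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"ok"]


def status_for(app, path):
    """Call a WSGI app for one path and return the status line it sends."""
    sent = []
    body = app({"PATH_INFO": path}, lambda status, headers: sent.append(status))
    b"".join(body)  # consume the response body
    return sent[0]


site = with_gone_responses(demo_site)
print(status_for(site, "/expired-offer/"))
print(status_for(site, "/blog/"))
```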

Remove Sitemap-External Indexed Pages

- Identified indexed pages that were not in the sitemap.

- Requested removal through the Removals tool in Google Search Console.

If pages are indexed but not part of your sitemap or strategy, it means Google is crawling content you’re not prioritizing. We removed these to tighten focus on quality pages.
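Finding those pages is a set difference: indexed URLs minus sitemap URLs. The sketch below assumes you have a list of indexed URLs (for example, exported from Search Console) and the XML of a single sitemap file. The sample data is invented, and a sitemap index such as sitemap_index.xml would need each child sitemap fetched and parsed the same way.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return the set of <loc> URLs found in one sitemap document."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall(".//sm:loc", SITEMAP_NS) if loc.text}


def indexed_outside_sitemap(indexed_urls, sitemap_xml):
    """Indexed URLs that the sitemap does not list, sorted for review."""
    return sorted(set(indexed_urls) - sitemap_urls(sitemap_xml))


# Hypothetical sample data.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://clientsite.com/best-seo-tips/</loc></url>
  <url><loc>https://clientsite.com/about/</loc></url>
</urlset>"""

SAMPLE_INDEXED = [
    "https://clientsite.com/best-seo-tips/",
    "https://clientsite.com/tag/seo/",
    "https://clientsite.com/author/admin/",
]

for url in indexed_outside_sitemap(SAMPLE_INDEXED, SAMPLE_SITEMAP):
    print(url)
```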

Block Pagination Crawl

- Disallowed /page/ URLs in robots.txt.

- Added a meta robots tag of noindex, follow to paginated archive pages.

Pagination adds duplicate or near-duplicate content across archive pages. Keeping those pages out of the crawl and the index ensures they don’t dilute SEO equity or create bloat.

Sitemap Refresh Trick

- Deleted sitemap from GSC.

- Re-submitted after 8–10 hours.

This trick often leads to faster re-crawling and index updates. While not backed by Google’s docs, it has proven effective in many campaigns.

Validate “Crawled – currently not indexed”

- Manually submitted URLs stuck in the “Crawled – currently not indexed” report for reprocessing.

Sometimes, Google crawls a page but doesn’t index it. Manually validating these can nudge Google to reconsider and index pages that were unfairly skipped.

Category & Tag Optimization

- Set category pages to noindex.

- Deleted all tag archives.

- Reviewed and fixed any wrong categories.

Tag and category archives often create duplicate content risks. Noindexing or deleting them helps improve site structure and prevents SEO dilution.

Check Keyword Cannibalization

- Identified overlapping articles targeting the same keyword.

- Deleted duplicates or moved them back to draft to consolidate relevance.

Too many posts targeting the same keyword can confuse search engines. Cleaning these up boosts the ranking ability of the strongest page on that topic.
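One way to spot overlap is to group query and page pairs (for example, from a Search Console performance export) and flag any query that brings up more than one URL. This is a minimal sketch with made-up data; the function name and the two-page threshold are my own assumptions, not a standard.

```python
from collections import defaultdict


def find_cannibalized(rows, min_pages=2):
    """rows: iterable of (query, page_url) pairs.

    Returns {query: [pages]} for queries served by min_pages or more URLs.
    """
    pages_by_query = defaultdict(set)
    for query, page in rows:
        pages_by_query[query.strip().lower()].add(page)
    return {
        query: sorted(pages)
        for query, pages in pages_by_query.items()
        if len(pages) >= min_pages
    }


# Hypothetical export rows.
SAMPLE_ROWS = [
    ("best seo tips", "/best-seo-tips/"),
    ("Best SEO Tips", "/seo-tips-2023/"),
    ("what is a sitemap", "/sitemap-guide/"),
]
print(find_cannibalized(SAMPLE_ROWS))
```

A flagged query is only a candidate for cleanup. Two pages can legitimately show up for the same query, so the decision to delete or draft one of them still needs a manual look.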

Speed Optimization

- Tested Core Web Vitals and page speed.

- Recommended professional optimization where needed.

Speed isn’t just a user-experience factor – it’s a ranking signal. We ensured the site loaded fast, especially on mobile, and deferred heavy fixes to a speed specialist when needed.
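For reading test results, the sketch below rates a measurement against Google's published Core Web Vitals thresholds (LCP in seconds, INP in milliseconds, CLS unitless). The function name and sample values are illustrative; the numbers you feed in would come from whichever speed tool you use.

```python
# (good up to, needs improvement up to); anything above the second value is poor.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "INP": (200, 500),   # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless
}


def rate_vital(metric, value):
    """Classify one Core Web Vitals measurement."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


# Hypothetical measurements for one page.
for metric, value in [("LCP", 2.1), ("INP", 350), ("CLS", 0.3)]:
    print(metric, value, rate_vital(metric, value))
```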

Meta Title & Description Overhaul (Daily Routine)

Each day:

- Identified top 10 performing pages via Search Console.

- Extracted 3 high-performing keywords for each page.

- Reviewed top 10 Google results for those keywords.

- Crafted 30 Meta Title + Description variations per page.

- Applied the best combination based on intent, CTR, and competitor gaps.

- Updated H1 headings to align with meta but kept existing content intact.
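The first two steps of the routine above (pick the top pages, then the top keywords for each) can be sketched in Python. This assumes "top performing" means most clicks and that the rows come from a Search Console export with page, query, and clicks fields; both are my assumptions, and the sample rows are invented.

```python
from collections import defaultdict


def daily_shortlist(rows, top_pages=10, top_queries=3):
    """rows: dicts with 'page', 'query' and 'clicks' keys.

    Returns {page: [best queries]} for the pages with the most total clicks.
    """
    clicks_by_page = defaultdict(int)
    queries_by_page = defaultdict(list)
    for row in rows:
        clicks_by_page[row["page"]] += row["clicks"]
        queries_by_page[row["page"]].append((row["clicks"], row["query"]))

    best_pages = sorted(clicks_by_page, key=clicks_by_page.get, reverse=True)[:top_pages]
    return {
        page: [query for _, query in sorted(queries_by_page[page], reverse=True)[:top_queries]]
        for page in best_pages
    }


# Hypothetical export rows.
SAMPLE_ROWS = [
    {"page": "/a/", "query": "seo tips", "clicks": 50},
    {"page": "/a/", "query": "seo guide", "clicks": 30},
    {"page": "/a/", "query": "seo basics", "clicks": 20},
    {"page": "/a/", "query": "what is seo", "clicks": 5},
    {"page": "/b/", "query": "link building", "clicks": 40},
    {"page": "/c/", "query": "meta tags", "clicks": 10},
]
print(daily_shortlist(SAMPLE_ROWS, top_pages=2))
```

The remaining steps (reviewing the top 10 results and writing the title and description variations) are manual judgment calls, so the sketch stops at producing the shortlist.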

Instead of changing the content itself, I focused on making each Meta Title and Description a perfect match for what searchers were actually looking for. This small shift alone had a huge impact on click-through rate.

Every day, I picked 10 top-performing pages, studied what was already ranking, and rewrote the meta content accordingly. Within a week, I had improved and updated 70 URLs – and the results spoke for themselves.

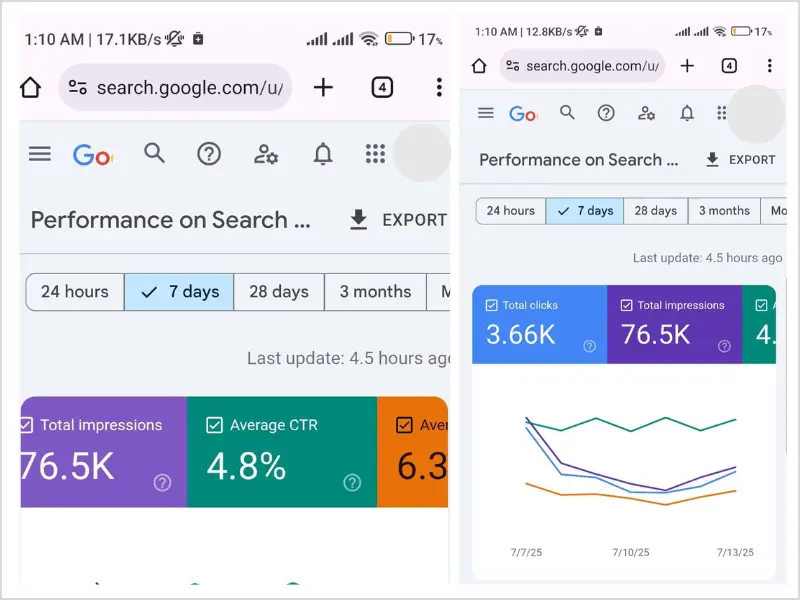

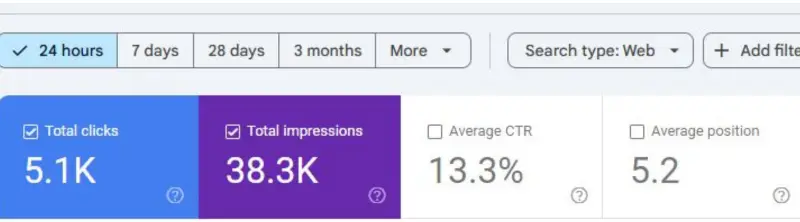

Result: 500 to 5,000+ Visitors Overnight!

At first, we saw a slight dip in traffic as changes rolled out (which is common). But within 24 hours:

- Organic visits spiked from 500 to over 5,000

- Traffic later stabilized at around 2,000/day

The single biggest driver? Meta Title and Description revamp.

This simple change made pages more clickable, improved CTR, and boosted their SERP positions. When search engines see high CTR and engagement, they reward you with better rankings.

Final Word

This SEO case study proves that sometimes, simple fixes make the biggest impact. By cleaning crawl paths, removing low-value pages, and tightening up technical SEO, we built a strong foundation Google could trust and prioritize.

But the real game-changer? Crafting Meta Titles and Descriptions that matched search intent and outperformed competitors on click-worthiness. That alone turned passive impressions into high-volume clicks almost overnight.

Bottom line: If your site already has decent traffic and rankings, don’t chase fancy hacks. Focus on precision, clarity, and relevance. That’s what drives results.

Article Reference:

- Abdul Aouwal (SEO & Web Development expertise to drive success)